ARPEGGIO: Advanced Robotic Perception for Mapping and Localization (Sep’21–Feb’25)

National Project

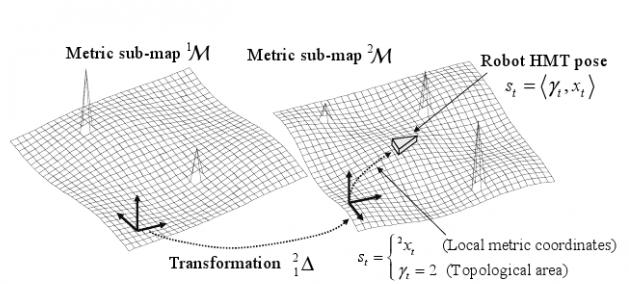

Mapping and localization (M&L) are two fundamental pillars for building effective, intelligent mobile robots. Although both problems are now well understood conceptually, and good implementations are available to the robotics community, critical issues must still be solved before M&L reaches the degree of robustness and dependability that real applications demand. Toward this aim, the project will investigate both improvements to existing methods and novel approaches, from the geometric as well as the semantic perspective. At the geometric level, we will investigate:

- Appearance-based M&L, a challenging approach that models the environment as a collection of geo-tagged holistic image descriptors. Localization is then performed by regressing the pose of a query image from its descriptor and a subset of that map.

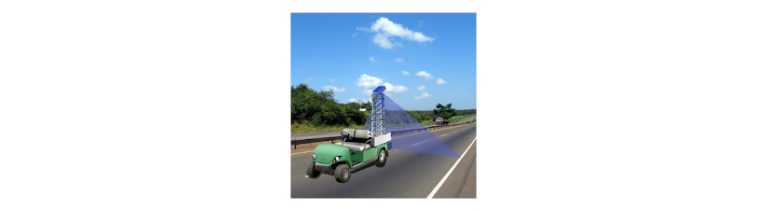

- Direct, dense registration methods to exploit the new generation of digital and solid-state 3D Lidars.

- Mathematical tools to guarantee the optimality of the solutions computed at the back end of a variety of M&L subproblems (relative pose between cameras, 3D point registration, or pose-graph optimization).
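To make the appearance-based idea above more concrete, here is a minimal sketch of localizing a query image against a map of geo-tagged holistic descriptors. The project text does not say which regression technique it uses, so this sketch swaps in a simple stand-in: k-nearest-neighbour retrieval in descriptor space followed by an inverse-distance-weighted average of the neighbours' geo-tags. The function names (`localize`, `l2_distance`), the toy 2-D descriptors and the position-only (x, y) geo-tags are all hypothetical; real holistic descriptors are high-dimensional and the pose would usually include orientation.

```python
import math
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]
Position = Tuple[float, float]


def l2_distance(a: Descriptor, b: Descriptor) -> float:
    """Euclidean distance between two holistic image descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def localize(query: Descriptor,
             geo_map: List[Tuple[Descriptor, Position]],
             k: int = 3,
             eps: float = 1e-9) -> Position:
    """Estimate the query position from its k most similar map descriptors.

    Hypothetical stand-in for the regression step: the position is an
    inverse-distance-weighted average of the geo-tags of the k nearest
    map entries in descriptor space.
    """
    if not geo_map:
        raise ValueError("the map is empty")
    # Rank map entries by descriptor distance and keep the k closest.
    ranked = sorted(geo_map, key=lambda entry: l2_distance(query, entry[0]))
    nearest = ranked[:k]
    # Closer descriptors get larger weights; eps avoids division by zero
    # when the query matches a map descriptor exactly.
    weights = [1.0 / (l2_distance(query, d) + eps) for d, _ in nearest]
    total = sum(weights)
    x = sum(w * p[0] for w, (_, p) in zip(weights, nearest)) / total
    y = sum(w * p[1] for w, (_, p) in zip(weights, nearest)) / total
    return (x, y)


if __name__ == "__main__":
    # Toy map: two places with very different appearance, 10 m apart.
    geo_map = [([1.0, 0.0], (0.0, 0.0)), ([0.0, 1.0], (10.0, 0.0))]
    # A query whose descriptor lies halfway between both places.
    print(localize([0.5, 0.5], geo_map, k=2))
```

Weighting by descriptor distance lets the estimate fall between mapped places instead of snapping to the single best match, which is one reason regression-style approaches can be attractive over pure retrieval.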
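As a point of reference for the optimality question raised above, point registration with known correspondences is one of the few M&L back-end problems with a closed-form, provably global least-squares solution. The sketch below shows the planar (2-D) case; it is not the project's method, and the function name `register_2d` is hypothetical. It finds the rotation angle θ and translation t minimizing Σ‖R(θ)pᵢ + t − qᵢ‖². After centring both point sets, the optimum is θ = atan2(Σ(p′ₓq′ᵧ − p′ᵧq′ₓ), Σ(p′ₓq′ₓ + p′ᵧq′ᵧ)). The 3D counterpart uses an SVD of the cross-covariance matrix instead.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def register_2d(src: List[Point], dst: List[Point]) -> Tuple[float, Point]:
    """Least-squares rigid alignment of src onto dst with known correspondences.

    Returns (theta, (tx, ty)) minimising sum ||R(theta) p_i + t - q_i||^2.
    In 2-D the optimal rotation has a closed form, so the result is the
    global minimum rather than a local one.
    """
    if len(src) != len(dst) or not src:
        raise ValueError("need equally many, non-zero corresponding points")
    n = len(src)
    # Centroids of both point sets.
    pcx = sum(p[0] for p in src) / n
    pcy = sum(p[1] for p in src) / n
    qcx = sum(q[0] for q in dst) / n
    qcy = sum(q[1] for q in dst) / n
    # Accumulate the dot and cross terms of the centred correspondences.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - pcx, py - pcy, qx - qcx, qy - qcy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, (tx, ty)


if __name__ == "__main__":
    # Source points rotated by 90 degrees and shifted by (2, 3).
    src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    dst = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
    print(register_2d(src, dst))
```

For harder subproblems such as relative camera pose or pose-graph optimization, no such closed form exists, which is why dedicated tools to certify or guarantee optimality are needed.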

From the semantic standpoint, the robot will adopt an active vision strategy to build semantic models of its environment more effectively and efficiently.

REFERENCE: PID2020-117057GB-I00

FUNDED BY: Ministerio de Ciencia e Innovación

PERIOD: Sep 2021 – Aug 2024 (extended to Feb 2025)

PRINCIPAL RESEARCHER: JAVIER GONZÁLEZ-JIMÉNEZ

INSTITUTION: University of Málaga

Publications

Publications in the scope of this project: