WISER: Building and exploiting semantic maps by mobile robots (Jun’18–Dec’20)

National Project

The purpose of the project is to provide the robot with the capability to understand its environment by building object-based maps (i.e., semantic maps) that can later be used to carry out high-level tasks. To this end, the project will work on semantic SLAM, focusing on several challenging open issues.

Recognizing objects in the environment is an essential capability for a robot that must behave intelligently and interact with people. Under real conditions this becomes a very difficult problem, both because of the large number of possible object classes and because of the poor acquisition conditions the robot may encounter: occlusions, changing lighting, the widely varying appearance of 3D objects, etc. To cope with this complexity, we can exploit the possibilities that a robot offers, including active perception (i.e., planning viewpoints that improve object recognition) and the use of all the information it may have about the workspace, such as a previously built map.

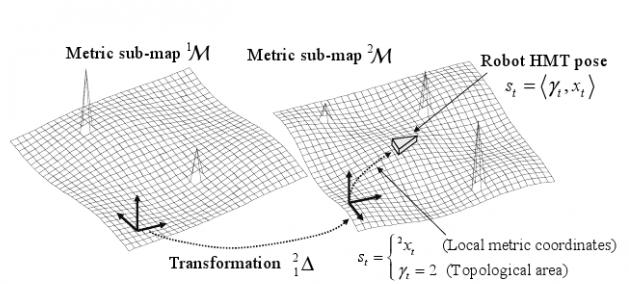

To create a useful object-based model of the environment, the robot must not only recognize objects but also compute their poses in the world. In practice, these poses are estimated with respect to the robot itself, so the robot must simultaneously maintain an accurate estimate of its own location. Moreover, these objects must be linked to relevant information such as their properties and functionality, commonly called semantic information.
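The relation between an object's pose relative to the robot and its pose in the world can be illustrated with a simplified planar (SE(2)) sketch. This is a minimal example under our own assumptions (2D poses, a known camera mounting on the robot, and an already-localized robot), not the project's implementation; `Pose2D` and `object_pose_in_world` are hypothetical names. With RGB-D cameras the same chaining applies in 3D, typically using 4×4 homogeneous transforms.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position (x, y) in metres and heading theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Return self (+) other, where `other` is expressed in the frame of `self`."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        heading = self.theta + other.theta
        return Pose2D(
            x=self.x + c * other.x - s * other.y,
            y=self.y + s * other.x + c * other.y,
            # Normalize the heading to the interval (-pi, pi].
            theta=math.atan2(math.sin(heading), math.cos(heading)),
        )


def object_pose_in_world(robot_in_world: Pose2D,
                         camera_in_robot: Pose2D,
                         object_in_camera: Pose2D) -> Pose2D:
    """Chain the frames world <- robot <- camera <- object (hypothetical helper).

    `robot_in_world` comes from self-localization, `camera_in_robot` from
    extrinsic calibration, and `object_in_camera` from the object detector.
    Any error in the robot's self-localization propagates directly into the
    object's world pose.
    """
    return robot_in_world.compose(camera_in_robot).compose(object_in_camera)


if __name__ == "__main__":
    robot = Pose2D(2.0, 1.0, math.pi / 2)   # robot localized at (2, 1), facing +y
    camera = Pose2D(0.1, 0.0, 0.0)          # camera mounted 10 cm ahead of the robot centre
    detection = Pose2D(1.5, 0.0, 0.0)       # object seen 1.5 m straight ahead of the camera
    print(object_pose_in_world(robot, camera, detection))
```

In this example the object ends up at roughly (2.0, 2.6) in the world frame: 1.6 m along the robot's heading (+y) from its position at (2, 1).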

The purpose of this project is to advance in this direction by providing the robot with the capability to understand its environment by building object-based maps (i.e., semantic maps) that can later be used to carry out high-level tasks. Thus, we will work on semantic SLAM (Simultaneous Localization and Mapping), focusing on:

- Developing algorithms for object recognition and pose estimation, particularly by resorting to Convolutional Neural Networks (CNNs).

- Integrating the semantic information with the topological, geometric, and appearance information of the map, and maintaining it over time.

- Investigating active perception mechanisms to overcome occlusions and ambiguous situations.

- Exploiting the semantic maps to assist people (e.g., finding objects).
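To make the idea of linking objects to semantic information more concrete, the following hypothetical sketch stores recognized objects together with their category, position, the topological place (room) they lie in, a recognition confidence, and free-form properties, and answers a simple "where can I find X?" query. The data layout and all names (`MapObject`, `SemanticMap`, `find`, `rooms_with`) are our own assumptions for illustration; the project's actual map representation is not described here.

```python
from dataclasses import dataclass, field


@dataclass
class MapObject:
    """One object instance in a hypothetical semantic map."""
    object_id: int
    category: str                          # label from the recognizer, e.g. "mug"
    position: tuple[float, float, float]   # (x, y, z) in the map frame, metres
    room: str                              # topological node the object belongs to
    confidence: float                      # recognition confidence in [0, 1]
    properties: dict[str, str] = field(default_factory=dict)  # e.g. functionality


@dataclass
class SemanticMap:
    objects: list[MapObject] = field(default_factory=list)

    def add(self, obj: MapObject) -> None:
        self.objects.append(obj)

    def find(self, category: str, min_confidence: float = 0.5) -> list[MapObject]:
        """Return objects of `category` above a confidence threshold, most confident first."""
        hits = [o for o in self.objects
                if o.category == category and o.confidence >= min_confidence]
        return sorted(hits, key=lambda o: o.confidence, reverse=True)

    def rooms_with(self, category: str, min_confidence: float = 0.5) -> set[str]:
        """Return the topological places where `category` has been observed."""
        return {o.room for o in self.find(category, min_confidence)}


if __name__ == "__main__":
    smap = SemanticMap()
    smap.add(MapObject(1, "mug", (1.2, 0.4, 0.9), "kitchen", 0.92, {"function": "drinking"}))
    smap.add(MapObject(2, "mug", (4.0, 2.1, 0.7), "office", 0.61))
    smap.add(MapObject(3, "laptop", (4.3, 2.0, 0.7), "office", 0.88))
    for obj in smap.find("mug"):
        print(obj.object_id, obj.room, obj.position)
    # prints:
    # 1 kitchen (1.2, 0.4, 0.9)
    # 2 office (4.0, 2.1, 0.7)
```

Keeping the confidence alongside each object lets a query trade recall for reliability, which matters when detections come from images taken under occlusions or poor lighting.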

The sensory system will comprise one or several RGB-D cameras on board the robot; therefore, effective and fast automatic calibration procedures will be developed.

REFERENCE: DPI2017-84827-R

FUNDED BY: Ministry of Economy and Competitiveness

PERIOD: Jan 2018 – Dec 2020

PRINCIPAL RESEARCHER: JAVIER GONZÁLEZ-JIMÉNEZ

INSTITUTION: University of Málaga

Publications

Publications in the scope of this project: