Scene Flow

Scene flow is the semi-dense or dense 3D motion field of a scene that moves completely or partially with respect to a camera.

Scene flow has many potential applications. In robotics, it can be used for autonomous navigation and manipulation in dynamic environments, where the future distribution of the surrounding objects needs to be predicted. It could also complement and improve state-of-the-art visual odometry and SLAM algorithms, which typically assume rigid or quasi-rigid environments. It could likewise be employed for human-robot or human-computer interaction, as well as for virtual and augmented reality. In general, it is useful wherever motion capture or motion analysis is required.

Traditionally, scene flow has been computed from image pairs coming from stereo cameras, where both the disparity and the motion need to be estimated. RGB-D cameras have changed this trend: they provide registered intensity and depth images, which can be used to estimate 3D motion accurately at short to medium range (under 5 meters). In our work, we propose several algorithms to estimate scene flow with RGB-D cameras.
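To illustrate why registered depth simplifies the problem, the following minimal sketch lifts two depth images to 3D with a pinhole camera model and subtracts corresponding points. It is not one of the algorithms described on this page: the function names, the intrinsics `fx`, `fy`, `cx`, `cy`, and the assumption that pixel correspondences `flow_uv` are already known are all illustrative choices.

```python
import numpy as np

def backproject(depth, fx, fy, cx, cy):
    """Lift a depth image (meters) to an (H, W, 3) array of 3D points (pinhole model)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row grids
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)

def scene_flow_from_correspondences(depth0, depth1, flow_uv, fx, fy, cx, cy):
    """Per-pixel 3D motion, assuming the pixel correspondences flow_uv (H, W, 2) are given.

    flow_uv[..., 0] is the column displacement and flow_uv[..., 1] the row
    displacement; both are rounded to the nearest pixel in this sketch.
    """
    h, w = depth0.shape
    p0 = backproject(depth0, fx, fy, cx, cy)
    p1 = backproject(depth1, fx, fy, cx, cy)
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    u1 = np.clip(u + np.rint(flow_uv[..., 0]).astype(int), 0, w - 1)
    v1 = np.clip(v + np.rint(flow_uv[..., 1]).astype(int), 0, h - 1)
    # 3D displacement between each point and its correspondence in the second frame.
    return p1[v1, u1] - p0
```

With a stereo pair, the depth of both frames would itself have to be estimated from disparity before this subtraction could be made; with an RGB-D camera it is measured directly.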

Real-time RGB-D Scene Flow

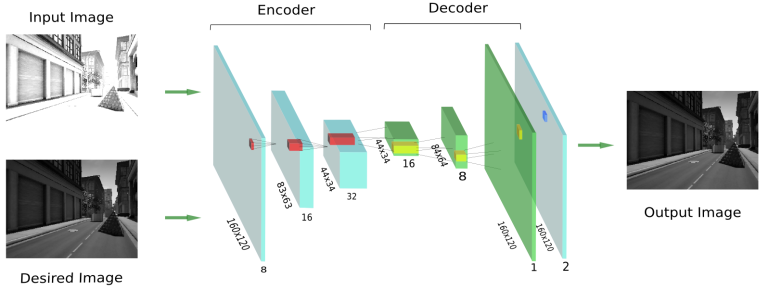

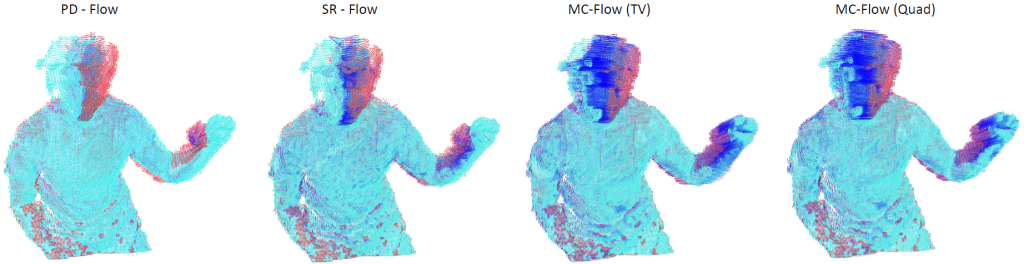

We have developed the first dense real-time scene flow algorithm for RGB-D cameras. Within a variational framework, photometric and geometric consistency are imposed between consecutive RGB-D frames, together with TV regularization of the flow. Taking advantage of the depth data provided by RGB-D cameras, regularization of the flow field is imposed on the 3D surface (or set of surfaces) of the observed scene instead of on the image plane, leading to more geometrically consistent results. The minimization problem is solved efficiently by a primal-dual algorithm implemented on a GPU. This work was developed in conjunction with the CVPR group (TUM).
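The terms of such an energy can be sketched as follows. This is a simplified illustration, not the actual implementation: it assumes the flow is parameterized as an image-plane displacement `(u, v)` plus a depth change `w`, and it uses plain image-plane TV rather than the surface-based regularizer described above. All function names are hypothetical.

```python
import numpy as np

def bilinear_sample(img, x, y):
    """Sample img at float coordinates (x = column, y = row), clamped to the border."""
    h, w = img.shape
    x = np.clip(x, 0, w - 1)
    y = np.clip(y, 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    ax = x - x0
    ay = y - y0
    top = (1 - ax) * img[y0, x0] + ax * img[y0, x1]
    bottom = (1 - ax) * img[y1, x0] + ax * img[y1, x1]
    return (1 - ay) * top + ay * bottom

def data_residuals(I0, I1, Z0, Z1, u, v, w):
    """Photometric and geometric residuals of a candidate flow (u, v, w)."""
    h, wd = I0.shape
    xs, ys = np.meshgrid(np.arange(wd, dtype=float), np.arange(h, dtype=float))
    # Photometric consistency: a point keeps its intensity between frames.
    r_photo = bilinear_sample(I1, xs + u, ys + v) - I0
    # Geometric consistency: the warped depth equals the old depth plus its change w.
    r_geo = bilinear_sample(Z1, xs + u, ys + v) - Z0 - w
    return r_photo, r_geo

def total_variation(f):
    """Isotropic image-plane TV of a 2D field using forward differences."""
    dx = np.diff(f, axis=1, append=f[:, -1:])
    dy = np.diff(f, axis=0, append=f[-1:, :])
    return np.sum(np.sqrt(dx**2 + dy**2))
```

A full energy would combine a robust norm of both residuals with a weighted TV term on each flow component, and a primal-dual solver would minimize it per pixel in parallel, which is what makes a GPU implementation attractive.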

Segmentation from Motion

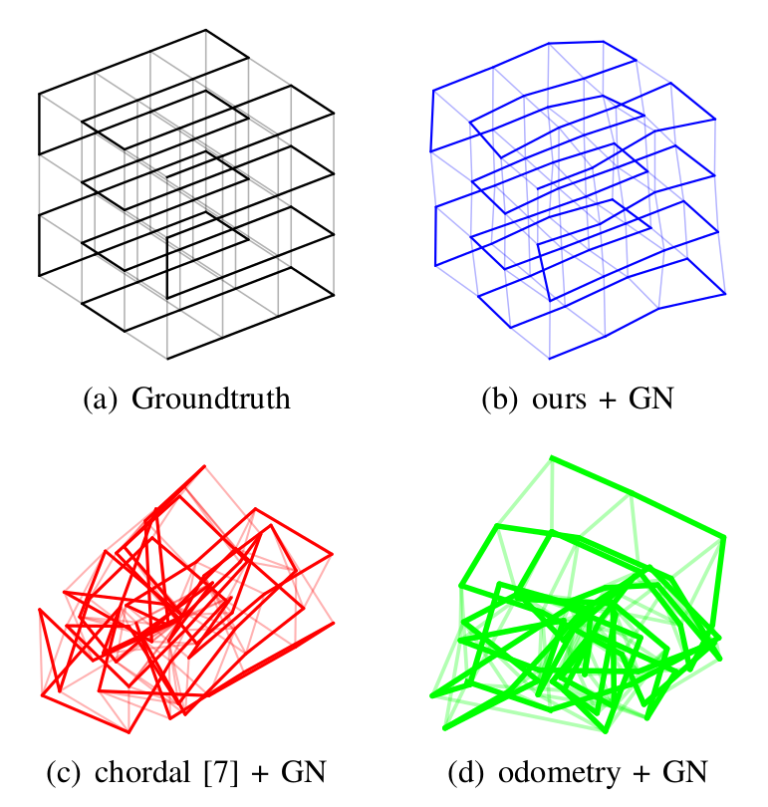

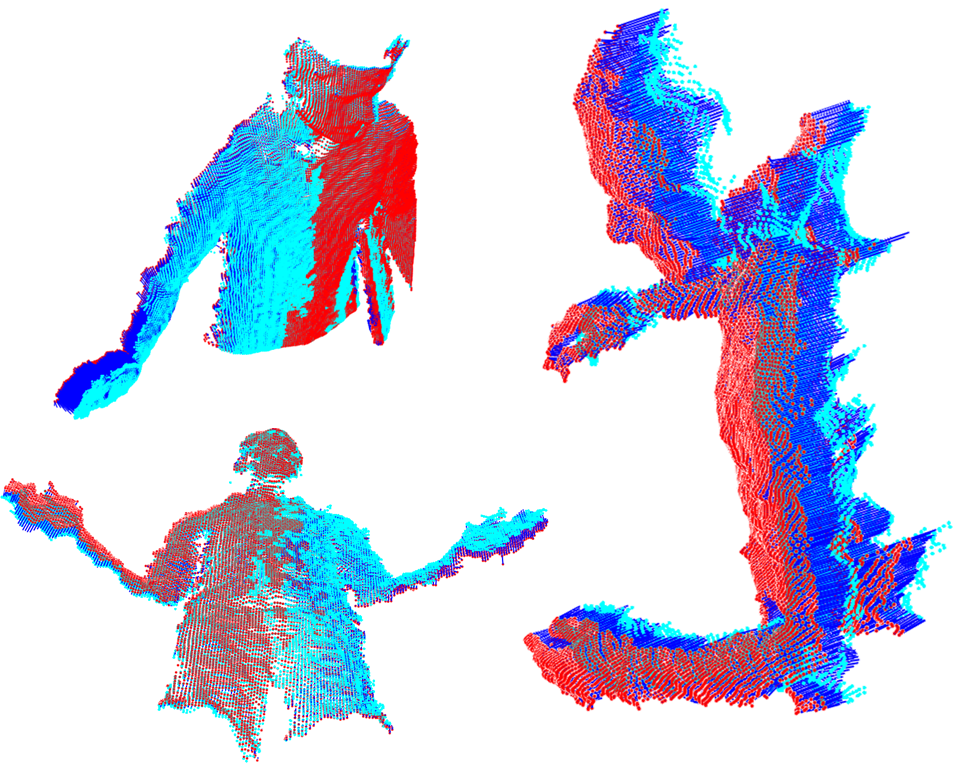

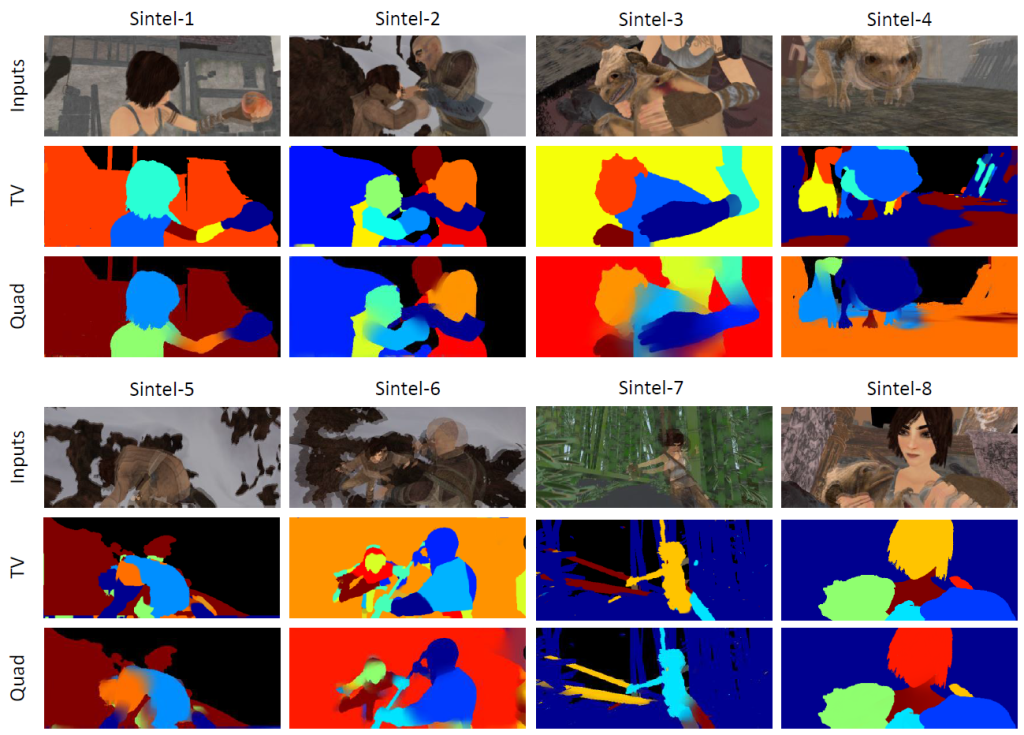

We have proposed a novel joint registration and segmentation approach to estimate scene flow from RGB-D images. Instead of assuming the scene to be composed of a number of independent rigidly-moving parts, we use non-binary labels to capture non-rigid deformations at transitions between the rigid parts of the scene. Thus, the velocity of any point can be computed as a linear combination (interpolation) of the estimated rigid motions, which provides better results than traditional sharp piecewise segmentations. Within a variational framework, the smooth segments of the scene and their corresponding rigid velocities are alternately refined until convergence. A K-means-based segmentation is employed as an initialization, and the number of regions is subsequently adapted during the optimization process to capture an arbitrary number of independently moving objects. We use a weighted quadratic regularization term to favor smooth non-binary labels, but also test our algorithm with weighted TV. This work was developed in conjunction with the CVPR group (TUM).
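The interpolation of rigid motions with non-binary labels can be sketched as below. This is a minimal illustration under stated assumptions: each rigid motion is represented as a 6-vector twist (linear velocity followed by angular velocity), the labels of each point are non-negative and sum to one, and the function names are hypothetical rather than taken from the original code.

```python
import numpy as np

def rigid_velocity(points, twist):
    """Velocity of 3D points under one rigid motion, twist = (vx, vy, vz, wx, wy, wz)."""
    lin, ang = twist[:3], twist[3:]
    return lin + np.cross(ang, points)

def blended_scene_flow(points, labels, twists):
    """Blend K rigid motions per point.

    points: (N, 3) 3D points
    labels: (N, K) non-binary labels, each row non-negative and summing to 1
    twists: (K, 6) one rigid motion per segment
    """
    # Velocity each point would have under each of the K rigid motions: (N, K, 3).
    per_segment = np.stack([rigid_velocity(points, t) for t in twists], axis=1)
    # Label-weighted linear combination over the K segments.
    return np.einsum("nk,nkc->nc", labels, per_segment)

points = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 2.0], [0.0, 1.0, 2.0]])
twists = np.array([[0.1, 0.0, 0.0, 0.0, 0.0, 0.0],   # pure translation along x
                   [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]])  # pure rotation about z
labels = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
flow = blended_scene_flow(points, labels, twists)
```

The first two points carry binary labels and simply follow one rigid motion each, while the third, with labels of 0.5 and 0.5, moves with the average of the two. This is the behavior that lets the transitions between rigid parts deform smoothly.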

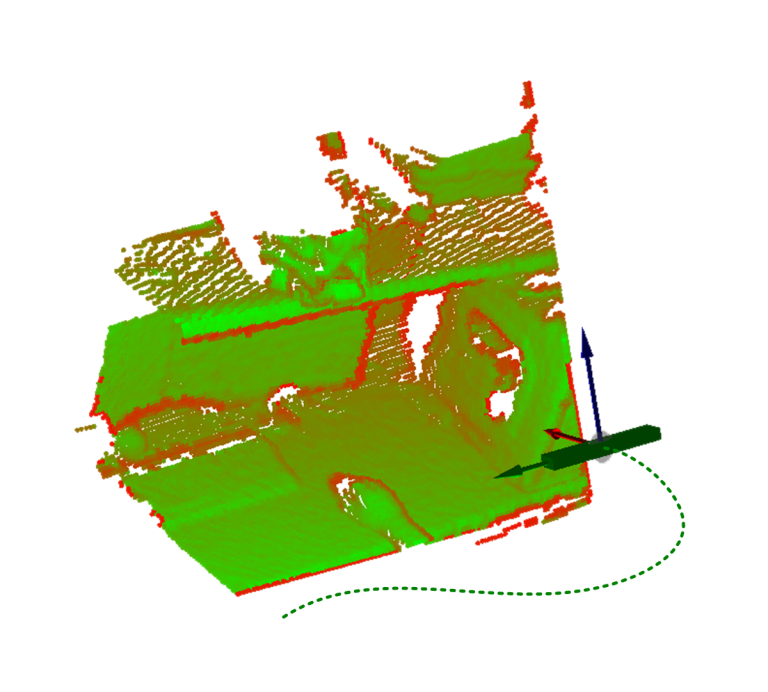

Here you can find the segmentations obtained for some sequences of the Sintel dataset, and below we show the 3D scene flow estimated for a moving person:

Publications

Here you can find a list of my publications, with the author's PDF version and BibTeX references: