Visual Odometry

Visual odometry (VO), also known as ego-motion estimation, is the process of estimating the trajectory of a camera moving within a rigid environment by analyzing a sequence of images.
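In practice, VO usually estimates the relative motion between consecutive frames and chains these increments into a trajectory. The following is a minimal sketch of that chaining step only. It assumes each increment is given as a 4×4 homogeneous transform `T_{k-1,k}` that expresses frame k in frame k-1; the function names are illustrative and are not taken from any of the methods described on this page.

```python
import numpy as np

def make_pose(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def chain_increments(increments):
    """Compose relative poses T_{k-1,k} into absolute poses T_{0,k}.

    An error in any increment propagates to every later pose,
    which is why pure odometry drifts over time.
    """
    poses = [np.eye(4)]
    for T_rel in increments:
        poses.append(poses[-1] @ T_rel)
    return poses

# Example: each frame is 1 m ahead along x and rotated 90 degrees about z.
Rz90 = np.array([[0.0, -1.0, 0.0],
                 [1.0,  0.0, 0.0],
                 [0.0,  0.0, 1.0]])
step = make_pose(Rz90, [1.0, 0.0, 0.0])
trajectory = chain_increments([step, step])
print(trajectory[-1][:3, 3])  # -> [1. 1. 0.]
```

Because each new pose is obtained by right-multiplying the previous one, the sketch stays agnostic about how the increments themselves are estimated (points, lines, or range flow).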

VO is widely employed in mobile robotics and autonomous driving because it overcomes drawbacks of traditional solutions such as wheel odometry, IMUs, or GPS-based systems. It is also used in augmented and virtual reality, where VO algorithms often localize the AR/VR device. Here, we address the visual odometry problem from several perspectives, employing different sensors:

Monocular Cameras

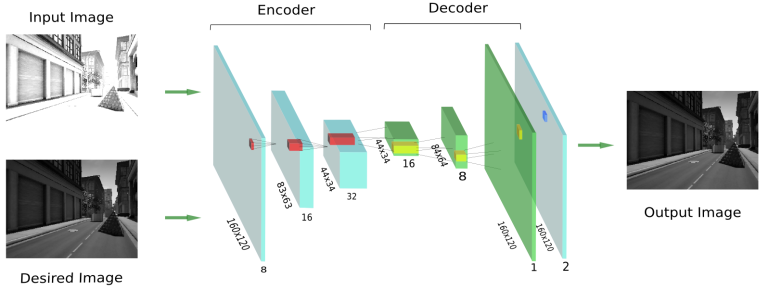

PL-SVO: In this work, we extend SVO, a popular semi-direct approach to monocular visual odometry, to work with line segments, obtaining a more robust system capable of dealing with both textured and structured environments. The proposed odometry system allows for fast tracking of line segments, since it eliminates the need to continuously extract and match features between subsequent frames. The method has a higher computational burden than the original SVO, but it still runs at 60 Hz on a personal computer while performing robustly in a wider variety of scenarios.
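Tracking segments without re-extracting and matching them relies on a simple idea: once the camera pose is predicted, a known 3D segment can be projected into the new image and compared there directly. The sketch below illustrates only that ingredient, under simplifying assumptions (a pinhole camera with intrinsics `K`, a world-to-camera pose `(R, t)`, and nearest-pixel intensity lookup). The helper names are hypothetical, and this is not the PL-SVO implementation; a semi-direct pipeline would additionally refine the pose by minimizing residuals of this kind.

```python
import numpy as np

def project(K, R, t, P):
    """Project Nx3 world points into pixels with a pinhole model; (R, t) maps world to camera."""
    Pc = P @ R.T + t          # world -> camera frame
    uvw = Pc @ K.T            # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]

def sample_segment(K, R, t, P_start, P_end, n=10):
    """Project a 3D segment and return n pixel positions evenly spaced along its image."""
    ends = project(K, R, t, np.vstack([P_start, P_end]))
    lam = np.linspace(0.0, 1.0, n)[:, None]
    # Perspective projection maps lines to lines, so these samples lie on the projected segment.
    return (1.0 - lam) * ends[0] + lam * ends[1]

def photometric_residuals(img_ref, img_cur, pts_ref, pts_cur):
    """Intensity differences between corresponding samples (nearest-pixel lookup, no matching)."""
    def lookup(img, pts):
        cols = np.clip(np.rint(pts[:, 0]).astype(int), 0, img.shape[1] - 1)
        rows = np.clip(np.rint(pts[:, 1]).astype(int), 0, img.shape[0] - 1)
        return img[rows, cols].astype(float)
    return lookup(img_cur, pts_cur) - lookup(img_ref, pts_ref)

# Hypothetical setup: a horizontal 3D segment 5 m in front of the camera.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
P0, P1 = np.array([0.0, 0.0, 5.0]), np.array([1.0, 0.0, 5.0])
pts_ref = sample_segment(K, np.eye(3), np.zeros(3), P0, P1, n=3)
pts_cur = sample_segment(K, np.eye(3), np.array([0.1, 0.0, 0.0]), P0, P1, n=3)

# Synthetic images: the current image is the reference shifted by 10 px,
# which is exactly the image motion induced by the pose above.
img_ref = np.tile(np.arange(640.0), (480, 1))
img_cur = np.roll(img_ref, 10, axis=1)
print(photometric_residuals(img_ref, img_cur, pts_ref, pts_cur))  # -> [0. 0. 0.]
```

With the correct pose the residuals vanish, while reusing the reference samples unchanged (i.e., assuming no motion) leaves a residual of -10 at every sample; driving such residuals to zero is what lets a direct method follow the segment without a matching step.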

Stereo Cameras

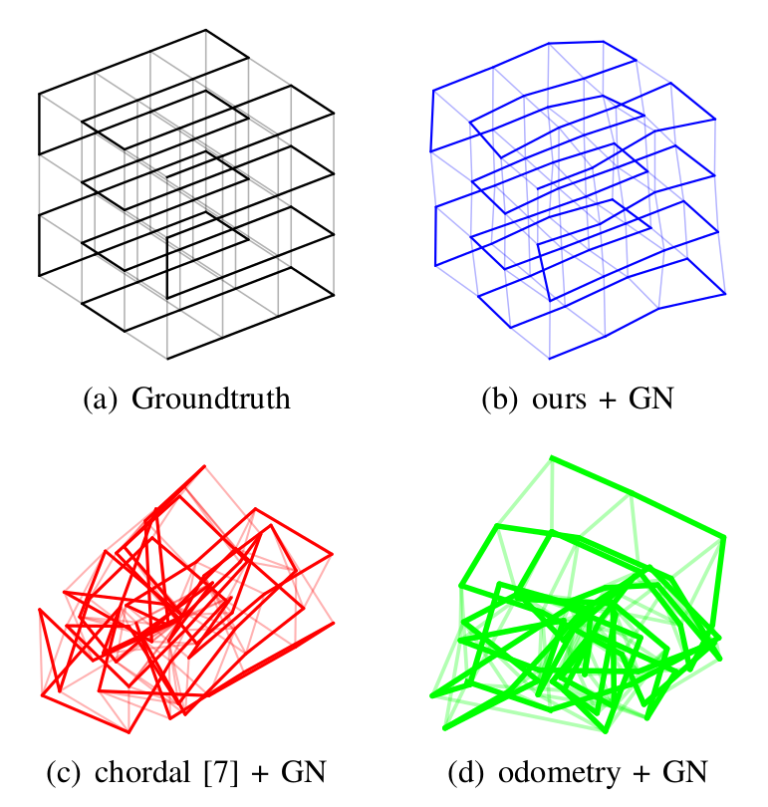

Stereo Visual Odometry with Points and Lines: A common strategy for stereo visual odometry (SVO), known as feature-based, tracks a set of relevant features (traditionally keypoints) across a sequence of stereo images and then estimates the pose increment between frames by imposing rigid-body constraints between the features. However, in low-textured scenes it is often difficult to find a large set of point features, or the features may not be well distributed over the image, so the performance of point-based algorithms deteriorates. To address this, we propose a probabilistic SVO algorithm that combines keypoints and line segments, since they provide complementary information, and is therefore capable of working in a wide variety of environments.
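The complementarity can be made concrete: a point constrains both image coordinates, whereas a line segment only constrains the direction perpendicular to the observed line, so motion along the line is invisible to it. The sketch below shows common forms of the two reprojection residuals and a cost that weights them by assumed fixed standard deviations (`sigma_pt`, `sigma_line`). All names are hypothetical; the algorithm's actual probabilistic weighting and optimizer are not reproduced here.

```python
import numpy as np

def project(K, R, t, P):
    """Pinhole projection of Nx3 world points; (R, t) maps world to camera."""
    Pc = P @ R.T + t
    uvw = Pc @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

def point_residuals(K, R, t, P, obs):
    """2D reprojection error of 3D points P (Nx3) against observed pixels obs (Nx2)."""
    return (project(K, R, t, P) - obs).ravel()

def line_residuals(K, R, t, P_start, P_end, obs_start, obs_end):
    """Signed distances (px) of projected 3D endpoints to the observed infinite 2D lines."""
    ones = np.ones((len(obs_start), 1))
    # Homogeneous line through the two observed endpoints, scaled so that l . x is a distance.
    l = np.cross(np.hstack([obs_start, ones]), np.hstack([obs_end, ones]))
    l /= np.linalg.norm(l[:, :2], axis=1, keepdims=True)
    d_start = np.sum(l * np.hstack([project(K, R, t, P_start), ones]), axis=1)
    d_end = np.sum(l * np.hstack([project(K, R, t, P_end), ones]), axis=1)
    return np.concatenate([d_start, d_end])

def weighted_cost(r_pts, r_lines, sigma_pt=1.0, sigma_line=1.0):
    """Sum of squared residuals, each scaled by an assumed (fixed) variance."""
    return np.sum(r_pts**2) / sigma_pt**2 + np.sum(r_lines**2) / sigma_line**2

K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
I, zero = np.eye(3), np.zeros(3)
pts = np.array([[0.0, 0.5, 5.0], [0.5, -0.5, 6.0]])
seg_a, seg_b = np.array([[0.0, 0.0, 5.0]]), np.array([[1.0, 0.0, 5.0]])

# Observations taken at the reference pose.
obs_pts = project(K, I, zero, pts)
obs_a, obs_b = project(K, I, zero, seg_a), project(K, I, zero, seg_b)

t_along = np.array([0.1, 0.0, 0.0])      # motion parallel to the segment
r_l = line_residuals(K, I, t_along, seg_a, seg_b, obs_a, obs_b)
r_p = point_residuals(K, I, t_along, pts, obs_pts)
print(r_l)                               # -> [0. 0.]  (the line cannot see this motion)
print(weighted_cost(r_p, r_l))           # nonzero: the points do see it
```

Moving perpendicular to the segment instead (for example `t = [0, 0.1, 0]`) yields line residuals of 10 px, so each feature type covers motions the other constrains poorly.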

Range Sensors

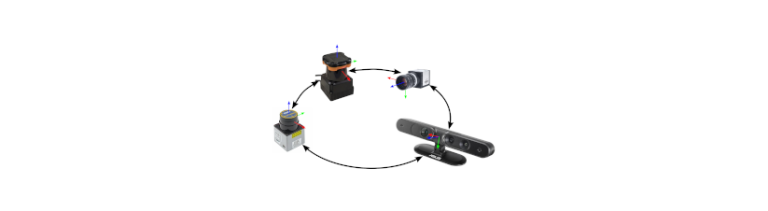

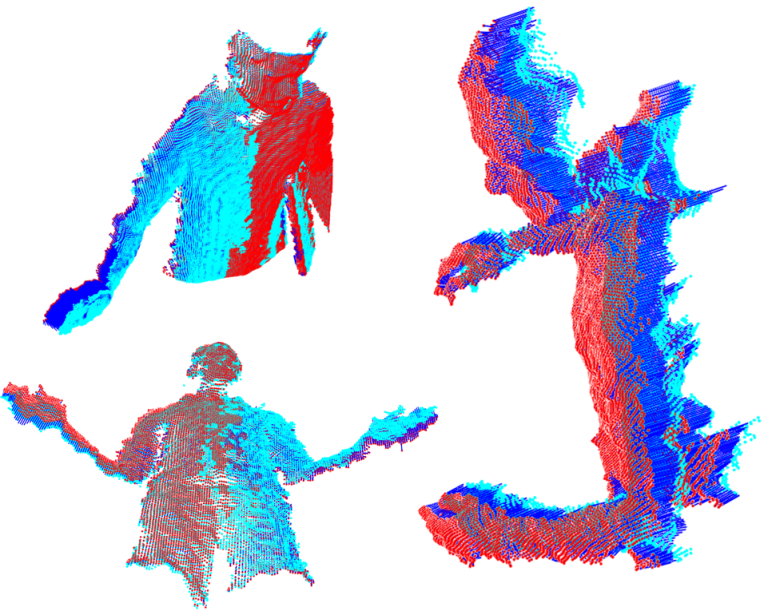

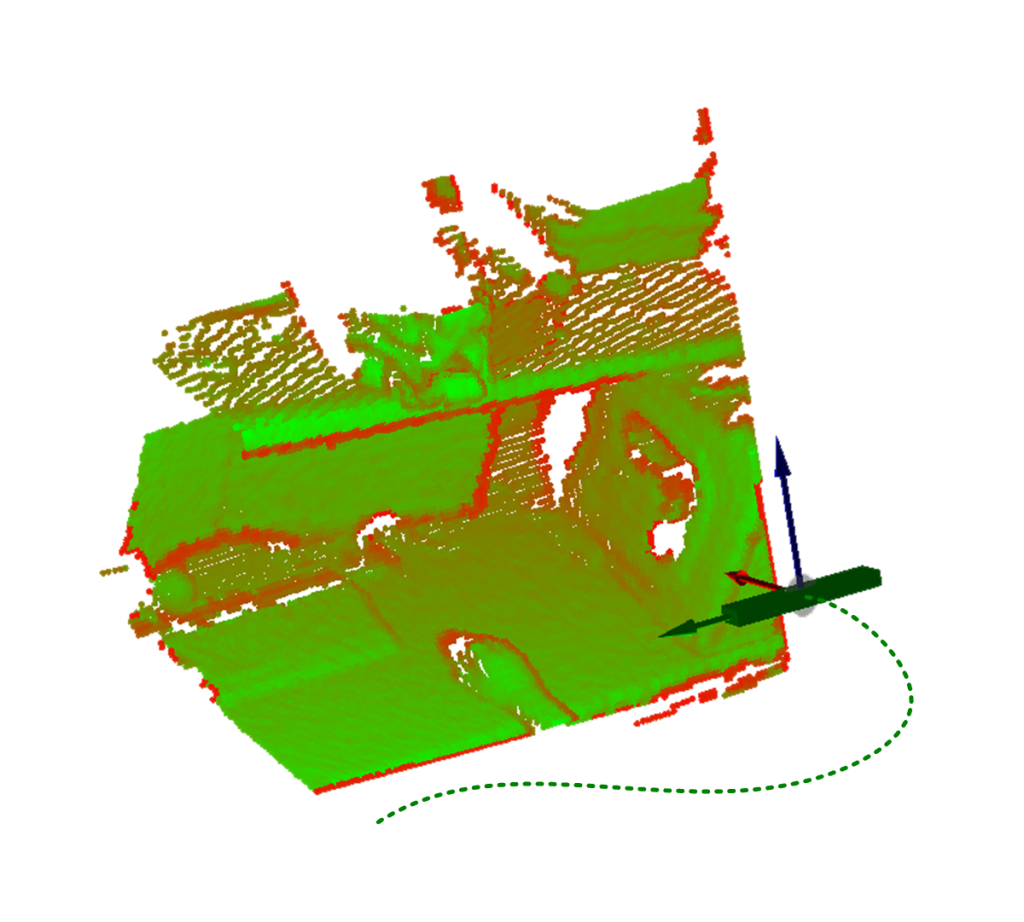

We have developed a new dense method to compute the odometry of a free-flying range sensor in real time. The method applies the range flow constraint equation to the points sensed in consecutive range scans to derive the linear and angular velocity of the sensor in a rigid environment. A distinctive feature of our method is that the same input data (range measurements) are exploited both to obtain the sensor motion that minimizes the geometric error and to perform the warping within a coarse-to-fine scheme. This makes it applicable to any range sensor, not only to RGB-D cameras as is the case for other existing approaches. In particular, we have implemented and tested it for two different sensors: range cameras and laser scanners.
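For reference, a common way to write the range flow constraint for a range camera is given below. The notation is ours and assumes a pinhole model with focal lengths $f_x, f_y$ and a depth image $Z(u, v, t)$; the exact parametrization used in the papers may differ. The first equation states that the depth of an observed point must change consistently with its apparent image motion, and the second gives the velocity of a static point $\mathbf{P} = (X, Y, Z)$, expressed in the sensor frame, when the sensor moves with linear velocity $\boldsymbol{\nu}$ and angular velocity $\boldsymbol{\omega}$:

$$
\dot Z = \frac{\partial Z}{\partial t} + \frac{\partial Z}{\partial u}\,\dot u + \frac{\partial Z}{\partial v}\,\dot v,
\qquad
\dot{\mathbf{P}} = -\boldsymbol{\nu} - \boldsymbol{\omega} \times \mathbf{P},
$$

$$
\dot u = f_x\,\frac{\dot X Z - X \dot Z}{Z^2},
\qquad
\dot v = f_y\,\frac{\dot Y Z - Y \dot Z}{Z^2}.
$$

Substituting the second line into the first gives one equation per pixel that is linear in $\boldsymbol{\xi} = (\boldsymbol{\nu}, \boldsymbol{\omega})$. Stacking all pixels yields an overdetermined system whose residuals can be minimized for the sensor velocity; a (weighted) least-squares fit is assumed here as the simplest choice.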

RF2O (Range Flow-based 2D Odometry for laser scanners): With a runtime of barely 1 millisecond, RF2O estimates planar motion at negligible computational cost, which makes it attractive for the many robotic applications that are computationally demanding and may require real-time performance. Several experiments were conducted to test the performance of our method, including qualitative and quantitative comparisons with wheel odometry, Point-to-Line ICP, and the Polar Scan Matcher. Overall, our approach provides the most accurate results with the lowest runtime.
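For a 2D laser scanner, the range flow idea reduces to one scalar equation per beam. Writing the scan as a range $r(\theta, t)$ and the planar sensor twist (in the sensor frame) as $(v_x, v_y, \omega)$, a static point satisfies $\dot r = -(v_x\cos\theta + v_y\sin\theta)$ and $\dot\theta = (v_x\sin\theta - v_y\cos\theta)/r - \omega$, so the constraint $\dot r = r_t + r_\theta\,\dot\theta$ is linear in the twist. The sketch below is a single-level, unweighted least-squares version under those assumptions. It omits RF2O's coarse-to-fine warping, robust weighting, and handling of real sensor data, and the simulated elliptical room and all function names are hypothetical test scaffolding.

```python
import numpy as np

def ellipse_scan(pos, heading, angles, a=6.0, b=3.0):
    """Hypothetical simulator: 360-degree scan from `pos` inside the room x^2/a^2 + y^2/b^2 = 1."""
    beta = angles + heading                  # beam directions in the world frame
    c, s = np.cos(beta), np.sin(beta)
    px, py = pos
    A = c**2 / a**2 + s**2 / b**2
    B = 2.0 * (px * c / a**2 + py * s / b**2)
    C = px**2 / a**2 + py**2 / b**2 - 1.0    # negative because the sensor is inside
    return (-B + np.sqrt(B**2 - 4.0 * A * C)) / (2.0 * A)

def range_flow_velocity_2d(r0, r1, angles, dt):
    """Least-squares planar twist (vx, vy, omega), in the sensor frame, from two scans."""
    dtheta = angles[1] - angles[0]
    r = 0.5 * (r0 + r1)                      # evaluate the constraint between the two scans
    # Central difference in bearing; np.roll wraps around because the scan covers 360 degrees.
    r_theta = (np.roll(r, -1) - np.roll(r, 1)) / (2.0 * dtheta)
    r_t = (r1 - r0) / dt
    c, s = np.cos(angles), np.sin(angles)
    # Row i:  r_t = vx*(-c - r_theta*s/r) + vy*(-s + r_theta*c/r) + omega*r_theta
    A = np.column_stack([-c - r_theta * s / r,
                         -s + r_theta * c / r,
                         r_theta])
    xi, *_ = np.linalg.lstsq(A, r_t, rcond=None)
    return xi

angles = np.linspace(-np.pi, np.pi, 720, endpoint=False)
true_twist = np.array([0.5, -0.2, 0.3])      # vx [m/s], vy [m/s], omega [rad/s]
dt = 0.01
p0 = np.array([0.7, -0.4])
r0 = ellipse_scan(p0, 0.0, angles)
r1 = ellipse_scan(p0 + true_twist[:2] * dt, true_twist[2] * dt, angles)
est = range_flow_velocity_2d(r0, r1, angles, dt)
print(est)  # expected to be close to true_twist
```

An elliptical room is used for the test because, unlike a circle, it has no rotational symmetry, so all three components of the twist are observable from the scan.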

DIFODO (DIFferential ODOmetry for range cameras): DIFODO runs in real time on a single CPU core. We compared it with two state-of-the-art methods: Generalized-ICP (GICP) and RDVO. Experiments show that our approach clearly outperforms GICP, which uses the same geometric input data, while achieving results similar to those of RDVO, which requires both geometric and photometric data to work. Furthermore, experiments demonstrate that our approach estimates fast motions precisely at 60 Hz on a single CPU core (at QQVGA resolution).

Publications

Please refer to the following articles for further details: