Extrinsic Sensor Calibration

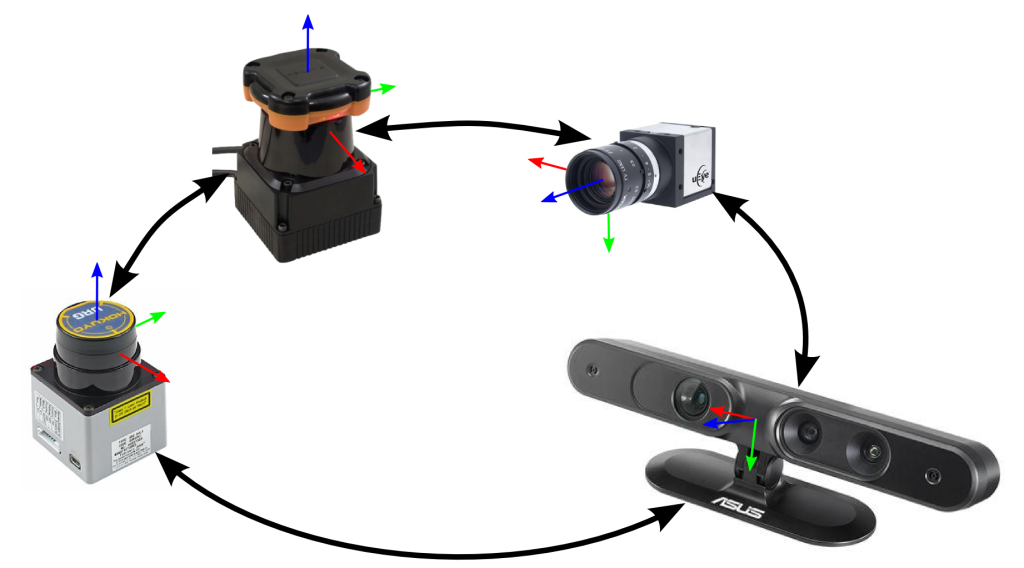

Extrinsic sensor calibration is the estimation of the relative transformation between different sensors. This is a key step for sensor fusion.

Mobile robots tend to accommodate numerous sensors. These sensors can be of different kinds (e.g. cameras, RGB-D devices, laser rangefinders, etc.) and, individually, serve a wide range of purposes.

The combination of information from separate sensors, known as sensor fusion, boosts the synergy of the sensory system and may improve the input information or even enable new uses that are not possible with the original data.

Since each sensor describes its data with respect to its own coordinate system, a key step for sensor fusion is an accurate and trustworthy extrinsic calibration between sensors, preferably accompanied by an analysis of its uncertainty.
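As a minimal sketch of what the calibration result is used for, the snippet below applies a rigid transformation (rotation plus translation) to express points measured in one sensor frame in the frame of another. The function names and the numeric extrinsics are hypothetical and chosen only for illustration; they do not come from any real sensor setup.

```python
import numpy as np

def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def transform_points(T, points):
    """Apply a 4x4 rigid transform to an (N, 3) array of points."""
    points = np.asarray(points, dtype=float)
    return points @ T[:3, :3].T + T[:3, 3]

# Hypothetical extrinsics: the LRF frame is rotated 90 degrees about z and
# shifted 10 cm along x with respect to the camera frame.
R_cam_lrf = np.array([[0.0, -1.0, 0.0],
                      [1.0,  0.0, 0.0],
                      [0.0,  0.0, 1.0]])
t_cam_lrf = np.array([0.10, 0.0, 0.0])
T_cam_lrf = make_transform(R_cam_lrf, t_cam_lrf)

# Two points measured in the LRF frame, re-expressed in the camera frame.
points_lrf = np.array([[1.0, 0.0, 0.0],
                       [0.0, 2.0, 0.0]])
points_cam = transform_points(T_cam_lrf, points_lrf)

# The inverse transform maps the points back to the LRF frame.
points_back = transform_points(np.linalg.inv(T_cam_lrf), points_cam)
```

Any error in the estimated rotation or translation propagates directly into the transformed points, which is why the accuracy and uncertainty of the calibration matter for fusion.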

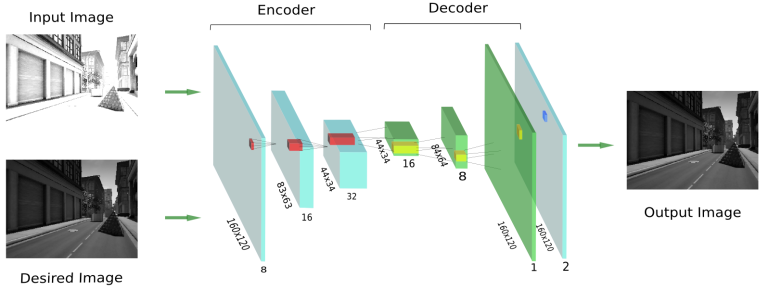

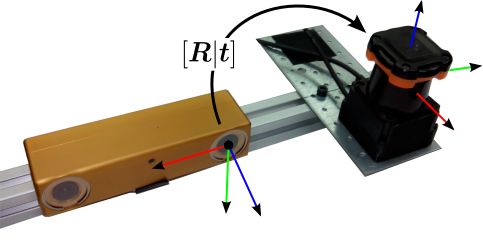

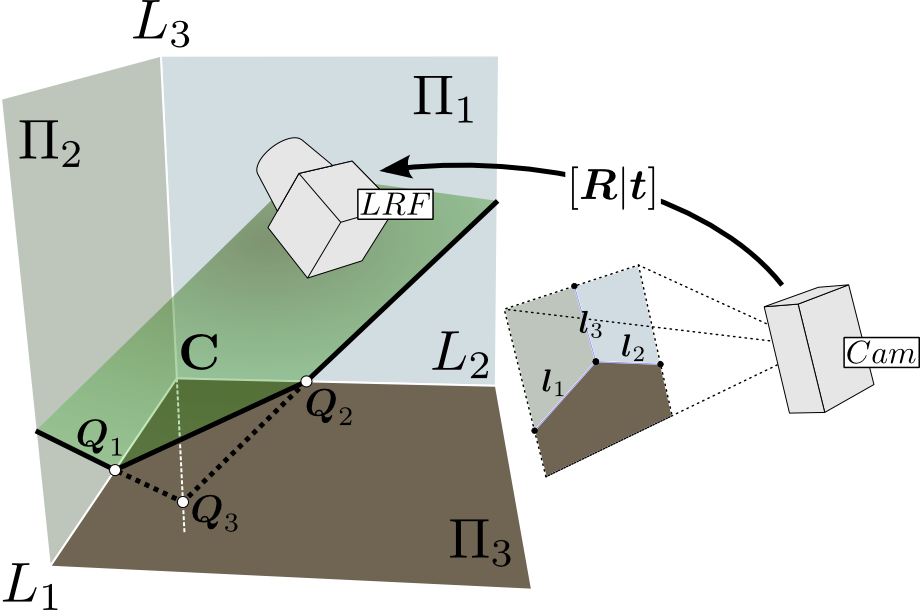

Camera – LRF calibration based on scene corners

Cameras and 2D laser rangefinders (LRFs) are commonly used on robots and complement each other well: cameras provide projective data only, whereas 2D LRFs provide range measurements. The combination of these two sensors gives rise to potential applications, such as

- pointcloud coloring

- extraction of semantic data

- improvement of visual odometry algorithms

The observation of an orthogonal trihedron, which is abundant as corners in human-made scenes, provides enough data to solve the calibration task without the need for any specific calibration pattern:

- From the observation of the trihedron, both line-to-plane and point-to-plane constraints can be imposed on the calibration parameters. The nature of these constraints allows us to decouple the computation of the rotation from that of the translation.

- The treatment of extracted features and the computation of the necessary geometric data is suitable for automation.

- All captured data can be fed into a Maximum Likelihood Estimation (MLE) optimization process, properly weighted according to the certainty of the data.
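The decoupling mentioned in the list above can be illustrated with a small sketch: once a rotation is available, each point-to-plane constraint becomes linear in the translation, so the translation follows from a weighted linear least-squares problem. The plane parametrization (unit normal `n` and offset `d` with `n·x + d = 0` in the camera frame), the function name `solve_translation`, and the use of inverse-variance weights are assumptions made for this example; it is not the formulation from the referenced articles.

```python
import numpy as np

def solve_translation(R, normals, offsets, points_lrf, weights=None):
    """Weighted least-squares translation from point-to-plane constraints.

    Each constraint reads n_i . (R p_i + t) + d_i = 0, where p_i is an LRF
    point and (n_i, d_i) is a plane expressed in the camera frame.
    """
    normals = np.asarray(normals, dtype=float)
    offsets = np.asarray(offsets, dtype=float)
    points_lrf = np.asarray(points_lrf, dtype=float)
    if weights is None:
        weights = np.ones(len(normals))
    w = np.sqrt(np.asarray(weights, dtype=float))
    # Rearranged constraint: n_i . t = -(d_i + n_i . R p_i)
    A = normals * w[:, None]
    rotated = points_lrf @ R.T
    b = -(offsets + np.einsum("ij,ij->i", normals, rotated)) * w
    t, *_ = np.linalg.lstsq(A, b, rcond=None)
    return t

# Purely synthetic check with the three planes of an orthogonal trihedron.
R_true = np.array([[0.0, -1.0, 0.0],
                   [1.0,  0.0, 0.0],
                   [0.0,  0.0, 1.0]])
t_true = np.array([0.1, -0.2, 0.3])
normals = np.eye(3)                       # planes x = 1, y = 2, z = 3
offsets = np.array([-1.0, -2.0, -3.0])
points_cam = np.array([[1.0, 0.5, 0.2],   # one point on each plane
                       [0.3, 2.0, 0.1],
                       [0.4, 0.6, 3.0]])
points_lrf = (points_cam - t_true) @ R_true   # same points in the LRF frame
t_est = solve_translation(R_true, normals, offsets, points_lrf)
```

Three planes with linearly independent normals, as provided by a trihedron, are enough to make the linear system for the translation well posed in this simplified setting.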

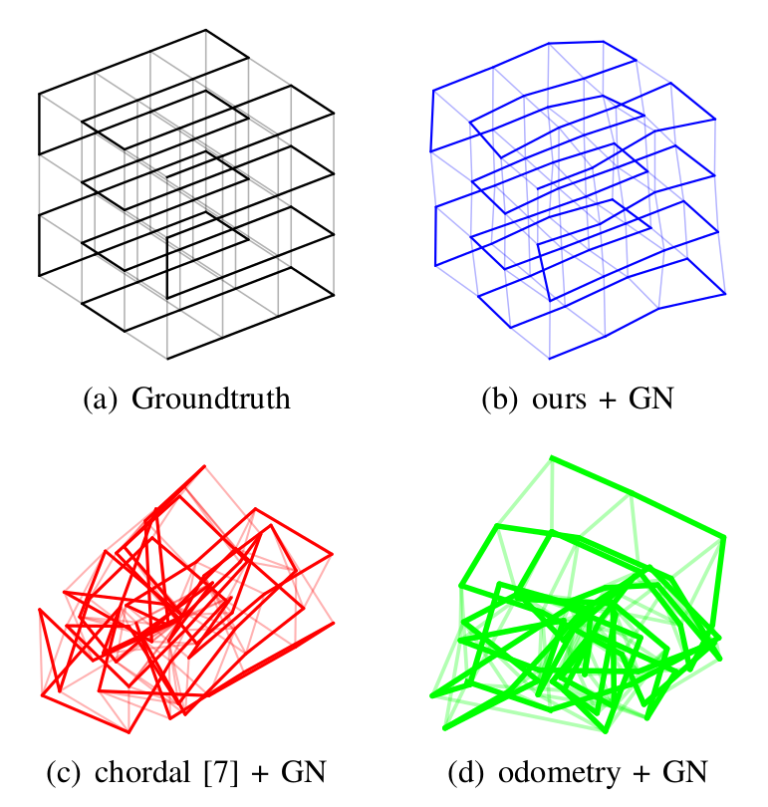

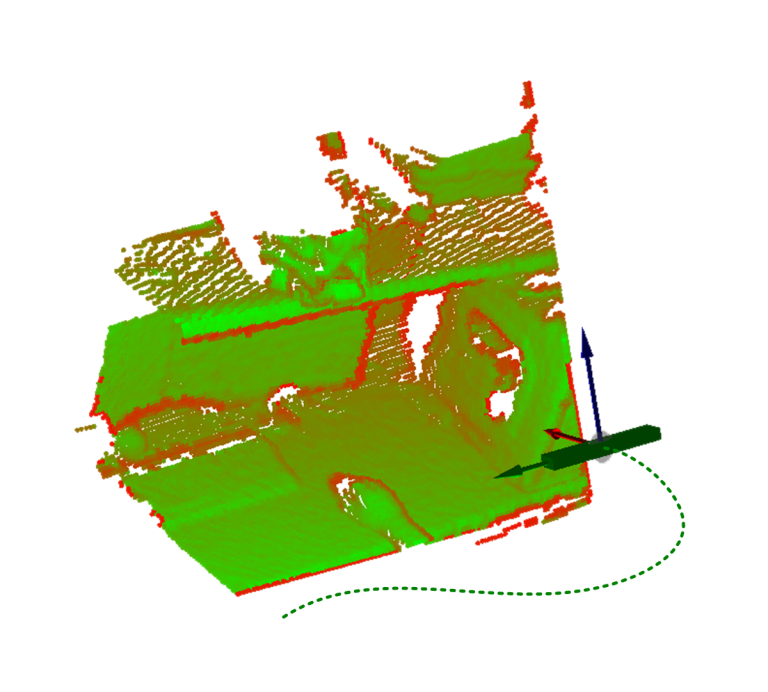

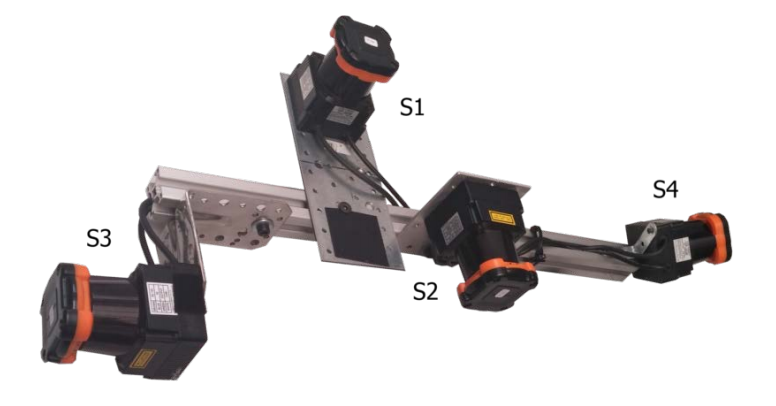

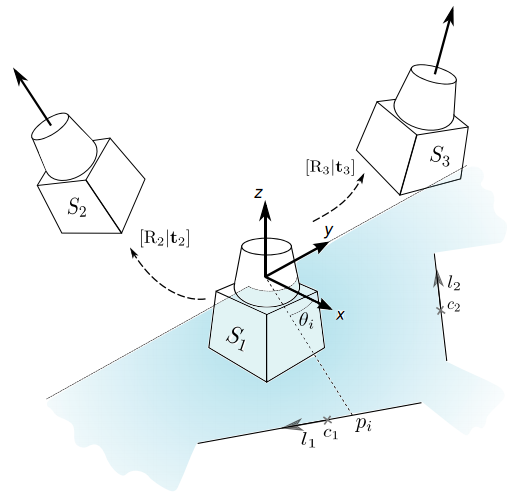

Extrinsic calibration of a set of 2D LRFs

The integration of several 2D laser rangefinders in a vehicle is a common approach to 3D mapping, obstacle detection and navigation. In this work we present a novel solution for the extrinsic calibration of a set of at least three laser scanners using only the information provided by the sensor measurements. The method only requires the lasers to observe a common planar surface from different orientations, so no specific calibration pattern is needed. This calibration technique can be used with almost any geometric sensor configuration (except for sensors scanning parallel planes), and constitutes a versatile solution that is accurate, fast and easy to apply.
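One way to picture the geometry, offered as an illustrative sketch rather than as the method of the referenced work: each LRF sees the common planar surface as a line, and all of those lines lie in the same plane. Once rotated into a common reference frame, the direction vectors of three such lines must therefore be coplanar, so their scalar triple product is zero when the rotations are correct. The function names and the synthetic configuration below are hypothetical.

```python
import numpy as np

def rot_x(angle_deg):
    """Rotation matrix about the x axis."""
    a = np.deg2rad(angle_deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, c, -s],
                     [0.0, s, c]])

def coplanarity_residual(rotations, directions):
    """Scalar triple product of three line directions in the reference frame.

    rotations:  three 3x3 sensor-to-reference rotations
    directions: three line directions, each in its own sensor frame
    """
    l1, l2, l3 = (R @ np.asarray(d, dtype=float)
                  for R, d in zip(rotations, directions))
    return float(np.dot(l1, np.cross(l2, l3)))

# Synthetic setup: the observed plane is z = 0 in the reference frame.
lines_ref = [np.array([1.0, 0.0, 0.0]),
             np.array([0.0, 1.0, 0.0]),
             np.array([1.0, 1.0, 0.0]) / np.sqrt(2.0)]
R_true = [np.eye(3), rot_x(30.0), rot_x(-40.0)]
# Line directions as each sensor would measure them in its own frame.
directions = [R.T @ l for R, l in zip(R_true, lines_ref)]

residual_good = coplanarity_residual(R_true, directions)
# A 20 degree error in the second rotation breaks the coplanarity.
R_bad = [np.eye(3), rot_x(50.0), rot_x(-40.0)]
residual_bad = coplanarity_residual(R_bad, directions)
```

In this simplified picture, two directions are always coplanar, so at least three sensors are needed for the residual to carry information, and sensors scanning parallel planes observe parallel lines, which makes the residual vanish regardless of the rotations. Both observations are consistent with the conditions stated above.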

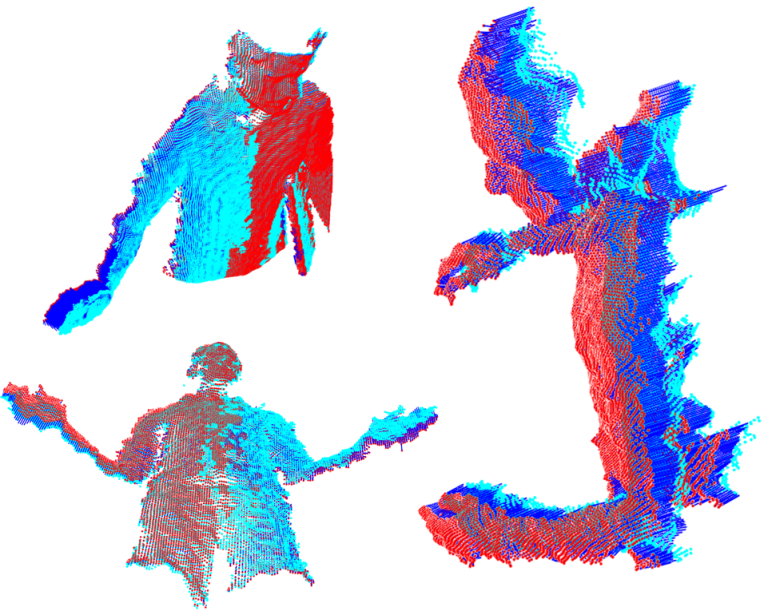

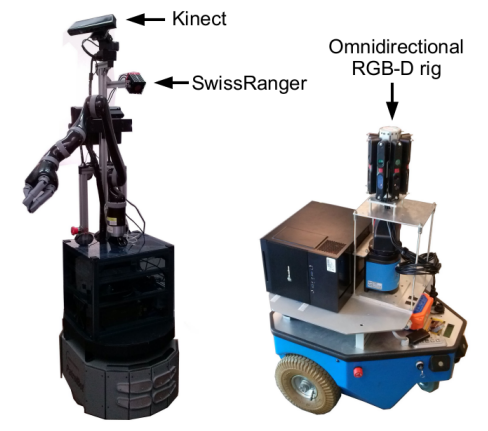

Extrinsic calibration of a set of range cameras

The integration of several range cameras in a mobile platform is useful for applications in mobile robotics and autonomous vehicles that require a large field of view. The method that we present calibrates two or more range cameras in an arbitrary configuration and only requires observing one plane from different viewpoints. The conditions to solve the problem are studied, and several practical examples are presented covering different geometric configurations, including an omnidirectional RGB-D sensor composed of 8 range cameras. The quality of this calibration is evaluated with several experiments that demonstrate an improvement in accuracy over the design parameters, while providing a versatile solution that is extremely fast and easy to apply.
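To illustrate how plane observations can constrain the relative rotation between two range cameras, the sketch below aligns corresponding plane normals with the standard SVD-based (Kabsch) solution. It assumes that each camera has already extracted the unit normal of the same plane in every viewpoint; the function name `rotation_from_normals` and the synthetic data are hypothetical, and this is a generic technique that may differ from the procedure in the referenced articles.

```python
import numpy as np

def rotation_from_normals(normals_a, normals_b):
    """Rotation R that best satisfies n_a ~ R n_b in the least-squares sense.

    normals_a, normals_b: (N, 3) arrays of corresponding unit plane normals
    seen by camera A and camera B.
    """
    A = np.asarray(normals_a, dtype=float)
    B = np.asarray(normals_b, dtype=float)
    H = B.T @ A                       # sum over i of n_b,i n_a,i^T
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that the result is a proper rotation.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    return Vt.T @ D @ U.T

# Synthetic check: camera B is rotated 30 degrees about z relative to A.
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
# Normals of the plane seen from four viewpoints, in camera B's frame.
normals_b = np.array([[1.0, 0.0, 0.0],
                      [0.0, 1.0, 0.0],
                      [0.0, 0.0, 1.0],
                      [1.0, 1.0, 1.0] / np.sqrt(3.0)])
normals_a = normals_b @ R_true.T      # the same normals in camera A's frame
R_est = rotation_from_normals(normals_a, normals_b)
```

The rotation is only fully determined when the observed normals are not all parallel, which is one reason the plane has to be seen from different viewpoints.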

Publications

Please refer to the following articles for further details: