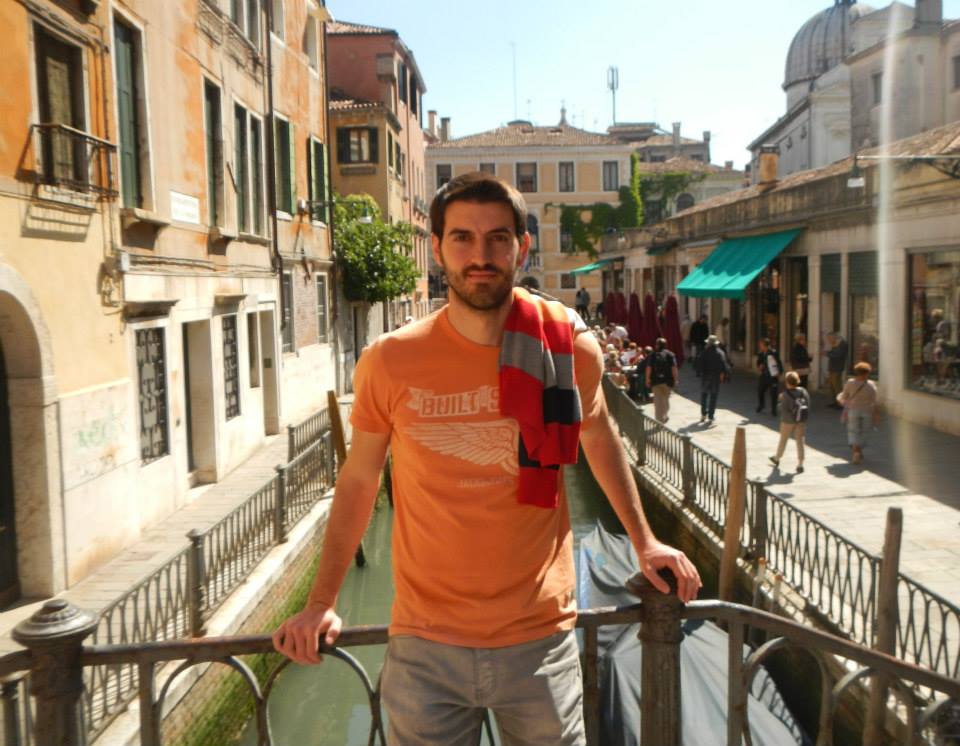

Dr. Mariano Jaimez Tarifa

Former member

Welcome!

I am Mariano Jaimez Tarifa, a joint PhD student in the Machine Perception and Intelligent Robotics group (MAPIR) at the University of Málaga (Spain) and the Computer Vision group at the Technical University of Munich (Germany). My research interests include:

- Computer Vision: Scene flow, visual odometry and tracking.

- Mobile robotics: Autonomous navigation.

- RGB-D cameras and their potential applications in the fields of robotics, computer vision and virtual & augmented reality.

Brief CV

I was born in Loja (Granada, Spain) in 1988. I received a B.Sc.-M.Sc. in “Ingeniería Industrial” (a broad engineering degree covering mechanics, computer science, electronics, electrical engineering, etc.) from the University of Málaga in 2010 with highest honors. I also obtained an M.Sc. in Mechatronics in 2012. I received a grant (DPI2011-25483) from the Spanish National Research Plan to pursue a 4-year PhD under the supervision of Prof. Javier González-Jiménez, which I started in January 2013. From March to July 2014 I was a guest researcher at the Computer Vision group of the Technical University of Munich, and in February 2015 I became a PhD student at the same university, pursuing a joint doctorate under the supervision of Prof. Daniel Cremers. I also visited the MIP lab at Microsoft Research Cambridge, where I worked under the supervision of Dr. Andrew Fitzgibbon (October – November 2015).

Research

Scene Flow

Scene flow is defined as the dense or semi-dense 3D motion field of a scene between two instants of time, expressed with respect to a static or moving camera. Its potential applications in robotics are numerous: autonomous navigation and manipulation in dynamic environments, pose estimation and SLAM refinement, human-robot interaction, and segmentation from motion are a few examples. Its usefulness also extends beyond robotics: it can be applied to human motion analysis and motion capture, virtual and augmented reality, and driving assistance.
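As a rough illustration of the definition above (not of the PD-Flow, MC-Flow or Joint-VO-SF algorithms), the scene flow of a single point can be sketched as the difference between its 3D positions at two instants. The sketch assumes a pinhole camera, a known pixel correspondence between the two frames, and depth in metres; the `Intrinsics` class, the function names and the intrinsic values are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical example values below)."""
    fx: float
    fy: float
    cx: float
    cy: float


def back_project(u, v, depth, k):
    """Map pixel (u, v) with depth in metres to a 3D point in the camera frame."""
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


def scene_flow(pixel_t0, depth_t0, pixel_t1, depth_t1, k):
    """3D displacement of one point, given its pixel and depth in both frames."""
    p0 = back_project(pixel_t0[0], pixel_t0[1], depth_t0, k)
    p1 = back_project(pixel_t1[0], pixel_t1[1], depth_t1, k)
    return tuple(b - a for a, b in zip(p0, p1))


# Assumed intrinsics for a 640x480 RGB-D camera (illustrative only).
k = Intrinsics(fx=525.0, fy=525.0, cx=319.5, cy=239.5)

# A point at the image centre, 2 m away, that moves sideways at constant depth.
flow = scene_flow((319.5, 239.5), 2.0, (372.0, 239.5), 2.0, k)
print(flow)
```

A dense method estimates this displacement for every pixel at once and has to find the correspondences itself, which is the hard part that this sketch takes as given.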

PD-Flow: In this work we present the first dense real-time scene flow algorithm for RGB-D cameras. It aligns photometric and geometric data, and is implemented on the GPU to achieve a high frame rate.

Code: PD-Flow (GitHub)

MC-Flow: Here we address the problem of joint segmentation and motion estimation. We propose a smooth piecewise segmentation strategy that provides more realistic results than traditional sharp segmentations.

Joint-VO-SF: We address the challenging problem of simultaneously estimating the camera motion (from the static parts of the scene) and the scene flow of the moving objects.

Code: Joint-VO-SF (GitHub)

Visual Odometry

Visual Odometry (VO) consists of estimating the pose of an agent (typically a camera) from visual inputs. Fast and accurate visual odometry is gaining importance in robotics over traditional solutions such as wheel odometry or inertial navigation based on IMUs. In our work, we introduce a novel VO method that takes consecutive range images (or scans) to estimate the linear and angular velocity of a range sensor. Our method only requires geometric data and, although it could theoretically work with any kind of range sensor, we have particularized its formulation to depth cameras (DIFODO) and 2D laser scanners (RF2O and SRF-Odometry).

For depth cameras: It runs in real time (30 Hz – 60 Hz) on a single CPU core.

For 2D laser scanners: Each estimate takes about 1 millisecond to compute on a single CPU core.

Code: DIFODO (MRPT)

Code: RF2O (ROS)
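Since the method outputs velocities rather than poses, a trajectory is obtained by integrating those velocities over time. The following is a minimal sketch of that integration step for the planar (2D laser) case, assuming the velocity is expressed in the sensor frame and stays constant between two scans. It is not taken from the DIFODO or RF2O code, and the function name `integrate_twist` is hypothetical.

```python
import math


def integrate_twist(pose, twist, dt):
    """Advance a planar pose (x, y, theta) by a sensor-frame twist held for dt seconds.

    twist = (vx, vy, omega): linear velocity in m/s and angular velocity in rad/s.
    Uses the closed-form solution for constant velocity (motion along a circular arc).
    """
    x, y, theta = pose
    vx, vy, omega = twist
    angle = omega * dt

    if abs(omega) < 1e-9:
        # Straight-line motion: the arc formula degenerates as omega -> 0.
        dx, dy = vx * dt, vy * dt
    else:
        s, c = math.sin(angle), math.cos(angle)
        dx = (vx * s - vy * (1.0 - c)) / omega
        dy = (vx * (1.0 - c) + vy * s) / omega

    # Rotate the sensor-frame displacement into the world frame and accumulate.
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    return (x + cos_t * dx - sin_t * dy,
            y + sin_t * dx + cos_t * dy,
            theta + angle)


# Four quarter-turns at constant forward speed should close a full circle.
pose = (0.0, 0.0, 0.0)
for _ in range(4):
    pose = integrate_twist(pose, (1.0, 0.0, math.pi / 2.0), dt=1.0)
print(pose)
```

Because each step composes the previous pose with a new increment, any error in a velocity estimate accumulates along the trajectory, which is why the accuracy of each estimate matters so much in odometry.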

3D Reconstruction and Tracking

Images used for object reconstruction or tracking normally observe not only the object to be modelled or tracked but also parts of the environment where this object is present. Therefore, their pixels must be segmented into two categories: those from which the object to reconstruct is visible (often called foreground) and those which observe other objects of the scene (often referred to as background). The foreground pixels contain information that the 3D model must fit, be it colour, position, orientation, etc. The background pixels impose the complementary restriction that the model should not be visible from them. Our work focuses on this second type of constraint, which tries to keep the model within the visual hull of the object.

We present a new background term which formulates ray casting as a differentiable energy function. More precisely, this term addresses a min-max problem by first solving ray casting for the background pixels and then deforming the model so that the rays of the background pixels do not intersect it. In addition, we describe a complete framework for 3D reconstruction and tracking with subdivision surfaces, and show that the proposed background term can be easily combined with different data terms into an overall optimization problem.
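The idea of penalizing a model that is hit by background rays can be illustrated with a toy example. This is a deliberately simplified stand-in and not the formulation from the paper: the model is a sphere instead of a subdivision surface, and a squared hinge penalty replaces the actual energy. The inner step finds, for each background ray, the point closest to the sphere centre; the penalty is then positive only when that point lies inside the sphere. All function names are hypothetical.

```python
import math


def ray_to_center_distance(origin, direction, center):
    """Distance from the sphere centre to the closest point on the ray (t >= 0)."""
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    to_center = [c - o for c, o in zip(center, origin)]
    # Parameter of the closest point, clamped so it stays in front of the camera.
    t = max(0.0, sum(a * b for a, b in zip(to_center, unit)))
    closest = [o + t * u for o, u in zip(origin, unit)]
    return math.dist(closest, center)


def background_penalty(rays, center, radius):
    """Sum of squared penetration depths over all background rays.

    The result is zero when no background ray passes through the sphere.
    """
    total = 0.0
    for origin, direction in rays:
        penetration = radius - ray_to_center_distance(origin, direction, center)
        total += max(0.0, penetration) ** 2
    return total


camera = (0.0, 0.0, 0.0)
background_rays = [(camera, (0.0, 0.0, 1.0)), (camera, (1.0, 0.0, 1.0))]

# The first ray passes through the centre of this sphere, so it is penalized.
print(background_penalty(background_rays, center=(0.0, 0.0, 5.0), radius=1.0))
# Moving the sphere sideways clears both rays and the penalty vanishes.
print(background_penalty(background_rays, center=(3.0, 0.0, 5.0), radius=1.0))
```

In an optimization loop, the model parameters (here only the centre and radius) would be updated to reduce this penalty together with the data terms that fit the foreground pixels.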

Reactive Navigation

Reactive navigation is a crucial component of nearly any mobile robot. It is one of the two halves that, together with the path planner, make up a navigation system according to the commonly used “hybrid architecture”. Within this scheme, a reactive navigator works at the low-level layer to guarantee safe and agile motions based on real-time sensor data. In our work we address the problem of planar navigation in indoor environments with a reactive navigation system that takes into account both the 3D shape of the robot and the 3D geometry of the environment. This navigator can be adapted to any wheeled robot, and has been extensively tested for years with different robotic platforms in varied and challenging scenarios.

Code: 3D PTG-Based Reactive Navigator (MRPT)

Publications

All Videos

- An Efficient Background Term for 3D Reconstruction and Tracking with Smooth Surface Models (2017)

- Fast Odometry and Scene Flow from RGB-D Cameras based on Geometric Clustering (2017)

- Robust Planar Odometry Based on Symmetric Range Flow and Multi-Scan Alignment (SRF) (2017)

- Planar odometry from a Radial Laser Scanner (RF2O) (2016)

- Motion Cooperation: Smooth Piece-wise Scene Flow from RGB-D Images (2015)

- Fast Visual Odometry for 3-D range sensors (2014)

- Real-Time Dense Scene Flow for RGB-D Cameras (2014)

- 3D PTG-Based Reactive Navigation: overview (2013)

- 3D PTG-Based Reactive Navigation: Going through a tight clearance (2013)

- Wheel-leg robotic module for hybrid locomotion (2011)

- Omnibola (2010)

Patents

Contact

Mariano Jaimez Tarifa

Dpto. Ingeniería de Sistemas y Automática

E.T.S.I. Informática – Telecomunicación

Universidad de Málaga

Campus Universitario de Teatinos

29071 Málaga, Spain

Phone: +34 952 13 3362

e-mail: marianojt@uma.es